background:

DQN increased the training stability by breaking the RL problem into sequential supervised learning tasks. To do so, DQN introduces the concept of a target network and uses an Experience Replay buffer (ER)。

we address issues that arise from the combi- nation of Q-learning and function approximation.Thrun & Schwartz(1993) were first to investigate one of these issues which they have termed as theoverestimation phenomena. The max operator in Q-learning can lead to overestimation of state-action values in the presence of noise.Van Hasselt et al.(2015) suggest the Double-DQN that uses the Double Q-learning estimator (Van Hasselt,2010) method as a solu- tion to the problem. Additionally,Van Hasselt et al.(2015) showed that Q-learning overestimation do occur in practice。

改进:

This work suggests a different solution to the overestima- tion phenomena, named Averaged-DQN (Section3), based on averaging previously learned Q-values estimates. The averaging reduces the target approximation error variance (Sections4and5) which leads to stability and improved results.

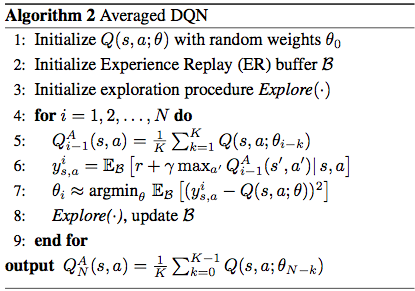

Averaged-DQN uses theKpreviously learned Q-values estimates to produce the cur- rent action-value estimate (line 5). The Averaged-DQN al- gorithm stabilizes the training process (see Figure1), by reducing the variance oftarget approximation error。

The computational effort com- pared to DQN is,K-fold more forward passes through a Q-network while minimizing the DQN loss (line 7). The number of back-propagation updates (which is the most de- manding computational element), remains the same as in DQN. The output of the algorithm is the average over the lastKpreviously learned Q-networks.